@kkvasan92: AXI is not required/desired for simulation/emulation.

Mar 23 2017

@anil: With Link Training we refer to training the LVDS connection required for the Gearwork.

@mehrikhan36 is this your GSoC project proposal?

Yes, we have IRC, it is on #apertus @ irc.freenode.

Just join there and ask if you need anything.

@sagnikbasu: Sorry for the delay, I obviously missed your questions.

Mar 21 2017

Hi

I am interested in this project.

Could you please elaborate on the project goals?

In the goals you mention link training; are you referring to the link training added in the HDMI 2.1 spec?

Mar 18 2017

Some points related to the 2nd part of this task [The software controlling the fan]

Mar 17 2017

A useful resource for pipelined convolution implementation: https://daim.idi.ntnu.no/masteroppgaver/013/13656/masteroppgave.pdf

Mar 16 2017

Hello, do we have an IRC channel for apertus for the GSoC 2017 projects?

Mar 14 2017

I will start sending my solutions here: https://drive.google.com/open?id=0B4vrlXPPuUghVU5qaHFwR2NWYjA

Elbehery did a great job and implemented the I2C module. I wrote a simplified but functional iteration of the PWM generator, and now I want to start working on the PID controller.
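For anyone following the fan-control part, here is a minimal sketch of how a PID controller could turn a temperature reading into a PWM duty cycle. It is written in Python purely as a reference model, not as the HDL. The gains, the 45 °C setpoint, the 8-bit PWM resolution and the names `FanPid` and `duty_to_compare` are all assumptions made for illustration; none of them come from the task description.

```python
from dataclasses import dataclass


@dataclass
class FanPid:
    """Discrete PID controller: temperature in, PWM duty cycle (0..1) out.

    All gains and the setpoint are illustrative assumptions.
    """
    kp: float = 0.08
    ki: float = 0.01
    kd: float = 0.02
    setpoint_c: float = 45.0  # hypothetical target temperature
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, temp_c: float, dt: float) -> float:
        # Positive error means the reading is hotter than the target.
        error = temp_c - self.setpoint_c
        derivative = (error - self.prev_error) / dt
        candidate_integral = self.integral + error * dt
        raw = (self.kp * error
               + self.ki * candidate_integral
               + self.kd * derivative)
        # The fan cannot run below 0 % or above 100 %.
        duty = min(1.0, max(0.0, raw))
        # Simple anti-windup: keep the new integral only when not saturated.
        if duty == raw:
            self.integral = candidate_integral
        self.prev_error = error
        return duty


def duty_to_compare(duty: float, period_counts: int = 255) -> int:
    """Convert a 0..1 duty cycle into a PWM compare value (8-bit assumed)."""
    return round(duty * period_counts)
```

A reading at the setpoint gives a duty of 0.0, and a very hot reading saturates at 1.0 without accumulating integral error. A fixed-point version of the same update step would be the natural next step for an FPGA implementation.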

Mar 13 2017

Hey @Bertl, I just implemented the top module including the PWM interface, and so far the module seems to work based on the test bench results. Let me know your feedback.

Here is a link to a simple flowchart: https://github.com/sagniknitr/Real-time-sobel-filter-in-FPGA/blob/master/gsoc2.jpg

Regarding hardware emulation, can you please tell me more about the interfaces?

Can AXI be used as the input stream interface for the hardware emulation model, assuming the emulated sensor model is going to run in the same FPGA as the main system?

Thanks a lot for clarifying. I am interested in this project.

I need some more clarification.

Some points regarding sensor and battery supply specifications:

Also, in addition to the above post, I would like to know which signals the data bus of the camera sensor (Truesense KAC12040 or CMOSIS CMV12000) will provide.

Hi,

Here are some of my queries regarding this project.

-> What kind of edges does the camera require? What if the algorithm were developed so that it only recomputes the edges when the camera is in motion? When the user is stationary, it would keep the edge information in memory so that it does not have to do any processing. I think this may save computation time.
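To make the idea concrete, here is a rough Python sketch of motion-gated edge detection: the Sobel result is cached and only recomputed when the frame differs enough from the last processed one. The threshold value, the mean-absolute-difference motion test and the names `MotionGatedEdges` and `mean_abs_diff` are assumptions for illustration only. Note that the motion test itself still needs one pass over the frame, so the saving is limited to the filter arithmetic.

```python
from typing import List, Optional

Frame = List[List[int]]  # grayscale rows, values 0..255


def sobel_magnitude(frame: Frame) -> Frame:
    """Approximate gradient magnitude |Gx| + |Gy|; border pixels stay 0."""
    h, w = len(frame), len(frame[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (frame[y - 1][x + 1] + 2 * frame[y][x + 1] + frame[y + 1][x + 1]
                  - frame[y - 1][x - 1] - 2 * frame[y][x - 1] - frame[y + 1][x - 1])
            gy = (frame[y + 1][x - 1] + 2 * frame[y + 1][x] + frame[y + 1][x + 1]
                  - frame[y - 1][x - 1] - 2 * frame[y - 1][x] - frame[y - 1][x + 1])
            out[y][x] = min(255, abs(gx) + abs(gy))
    return out


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel absolute difference, used as a crude motion measure."""
    total = sum(abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return total / (len(a) * len(a[0]))


class MotionGatedEdges:
    """Recompute edges only when the scene changed; otherwise reuse the cache."""

    def __init__(self, threshold: float = 2.0):  # threshold is an assumption
        self.threshold = threshold
        self.reference: Optional[Frame] = None
        self.cached: Optional[Frame] = None
        self.recomputed = 0

    def process(self, frame: Frame) -> Frame:
        moved = (self.reference is None
                 or mean_abs_diff(frame, self.reference) > self.threshold)
        if moved:
            self.cached = sobel_magnitude(frame)
            self.reference = frame
            self.recomputed += 1
        return self.cached
```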

Mar 12 2017

Bi-directional communication between ZYNQ and MachXO2 over a single LVDS pair (half duplex) in one case and over two LVDS pairs (full-duplex) in the other case.

Note that there is also a single ended clock line which can (and probably should :) be used for synchronization.

I don't think that this needs to be Vendor specific in any way.

I.e. you can use the Xilinx Vivado toolchain or any other (Altera Quartus, Lattice Diamond, ...) for testing and simulation, but the resulting HDL should be vendor independent.

Hi.

So I read the description of this project, and I assume that the bi-directional communication protocol is to be implemented on both the ZYNQ and the MachXO2 side. In the case of packet-oriented communication, should we implement an architecture similar to the UDP protocol, which I think would be best for high-bandwidth video data?

Mar 11 2017

Hi Mr Bertl,

Can I assume that the Xilinx tool flow will be used for hardware emulation, since the Xilinx Zynq 7020 is used in the AXIOM camera? Uncompressed video or image data would be placed in memory, and the sensor model should generate the output bit stream for that image or video according to the timing and functional standard of the sensor; am I correct? Is it possible to use available IPs (OpenCores / Xilinx) where they fit?

Mar 10 2017

I had in mind techniques that could be used for blurring, sharpening, un-sharpening, embossing, etc. I am not sure if any of these are already implemented, but my idea is to develop a common module in Verilog that can accept a kernel of any size (e.g., 3×3 or 5×5) and produce the desired effect on the image. For example, the user could input a 5×5 kernel to produce a Gaussian blur.
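As a software reference model for such a module, a minimal Python sketch of a generic kernel filter could look like the following. It is only meant for checking HDL output against known results. The function name `convolve2d`, the `divisor` parameter and the example kernels are assumptions chosen for illustration; the kernel is applied without flipping, which gives the same result as true convolution for the symmetric kernels shown.

```python
from typing import List, Sequence

Image = List[List[float]]

# Example kernels (illustrative choices, with their normalising divisors).
BOX_BLUR_3 = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]            # divisor 9
SHARPEN_3 = [[0, -1, 0], [-1, 5, -1], [0, -1, 0]]         # divisor 1
GAUSSIAN_5 = [[1, 4, 6, 4, 1],
              [4, 16, 24, 16, 4],
              [6, 24, 36, 24, 6],
              [4, 16, 24, 16, 4],
              [1, 4, 6, 4, 1]]                            # divisor 256


def convolve2d(image: Image,
               kernel: Sequence[Sequence[float]],
               divisor: float = 1.0) -> Image:
    """Apply an odd-sized square kernel to every fully covered pixel.

    No padding is used, so the output shrinks by (k - 1) in each dimension.
    """
    k = len(kernel)
    if k % 2 == 0 or any(len(row) != k for row in kernel):
        raise ValueError("kernel must be square with an odd size")
    h, w = len(image), len(image[0])
    out: Image = []
    for y in range(h - k + 1):
        row = []
        for x in range(w - k + 1):
            acc = 0.0
            # Multiply-accumulate over the k x k window.
            for j in range(k):
                for i in range(k):
                    acc += image[y + j][x + i] * kernel[j][i]
            row.append(acc / divisor)
        out.append(row)
    return out
```

For example, `convolve2d(img, GAUSSIAN_5, 256)` blurs `img` with the 5×5 binomial approximation of a Gaussian, and swapping in `SHARPEN_3` changes the effect without touching the filter code, which is the property the proposed Verilog module would need as well.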

Hey @Bertl, two days ago I asked if I could post a simple prototype for the I2C slave and you said it's okay.

Mar 9 2017

First, I am wondering if the mentors would be open to the implementation of other techniques (in addition to Sobel Filters) that are useful in film making?

I am a final-year undergraduate student at the National University of Sciences and Technology, with a major in Electrical Engineering. I participated in GSoC last year with the TimeLab organisation and developed a time series simulator in Python.

I have a particular interest in parallel hardware programming. Recently, as part of my semester project, I implemented a pipelined version of a convolutional neural network on an FPGA using Verilog.

Mar 8 2017

Yes, if we extend it a bit; I am just creating a simple daemon for now. The log is written to /var/log/syslog in my VM. You can see the log initialisation in the source I uploaded yesterday; just take a look at main.cpp. Thanks for reminding me of return values, as we also want to request set values to display/verify them.

@anuditverma

No, you don't need to know Django; you only need to know about unit testing. Feel free to consult another resource of your liking.

Just my idea: as the daemon is planned for user space first, it would not be a big problem to test it on a local machine. I am currently developing it on Linux Mint with Unix domain sockets, which are meant for local use only; other languages will of course need wrappers to be able to use commands that come in over the network. For security reasons, I don't want to allow access to the daemon over the network at the moment.
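To illustrate the local-only approach, here is a small Python sketch of a request/reply exchange over a Unix domain socket, including reading a value back after setting it. The actual daemon discussed here is written in C++ (main.cpp), so this is not its code: the `SET`/`GET` text commands, the `gain` key and all function names are hypothetical and only show the pattern.

```python
import os
import socket
import tempfile
import threading


def handle_command(settings: dict, line: str) -> str:
    """Hypothetical text protocol: 'SET key value' and 'GET key'."""
    parts = line.strip().split()
    if len(parts) == 3 and parts[0] == "SET":
        settings[parts[1]] = parts[2]
        return "OK"
    if len(parts) == 2 and parts[0] == "GET":
        return settings.get(parts[1], "ERR unknown key")
    return "ERR bad command"


def serve(server: socket.socket, settings: dict, max_clients: int) -> None:
    """Answer one command per connection, for a fixed number of clients."""
    for _ in range(max_clients):
        conn, _addr = server.accept()
        with conn:
            data = conn.recv(1024)
            conn.sendall(handle_command(settings, data.decode()).encode())


def request(path: str, line: str) -> str:
    """Client side: connect to the socket file, send a command, read the reply."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as client:
        client.connect(path)
        client.sendall(line.encode())
        return client.recv(1024).decode()


def demo() -> list:
    # The socket lives in the file system, so it is unreachable over the network.
    path = os.path.join(tempfile.mkdtemp(), "daemon.sock")
    settings: dict = {}
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as server:
        server.bind(path)
        server.listen(1)
        worker = threading.Thread(target=serve, args=(server, settings, 2),
                                  daemon=True)
        worker.start()
        replies = [request(path, "SET gain 2"), request(path, "GET gain")]
        worker.join(timeout=5)
    os.unlink(path)
    return replies
```

Because access is controlled by ordinary file permissions on the socket path, restricting who may talk to the daemon does not need any network-level configuration.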

Since the task was updated, I have been following the resources listed on it and getting myself acquainted with the prerequisites.

One of the resource links leads to the O'Reilly book "Test-Driven Development with Python", and it is advised to read chapters 1-4 of it; the book covers Python- and Django-based app development.

Mar 6 2017

Oh alright I see, thanks for the heads up @maltefiala, then I should certainly focus on other aspects of this project.

Dear @anuditverma, it's great you want to take a look at the current examples. Just bear in mind that the camera hardware has not been virtualised so far, so you won't be able to get useful replies when running the scripts on your Raspberry Pi. The latest firmware is here: http://vserver.13thfloor.at/Stuff/AXIOM/BETA/beta_20170109.dd.xz

Thanks @maltefiala for the update and for the application reminder; I am looking forward to applying. I will go through the updated task once again to get a clearer understanding and to strengthen my fundamentals.

@anuditverma, we finished updating this task. If you are still interested, please apply with a timeline proposal by April 3, 16:00 UTC. If you have any further questions, feel free to ask.

Mar 4 2017

Mar 3 2017

Mar 2 2017

@anuditverma, thanks a lot for your detailed answer. I will update this task as soon as we have improved the onboarding process.

Thanks for your interest in my work, so here are my views to your queries,

We are currently improving the onboarding process (documentation, tools, ...) for this task. I am positive we can give more information on Monday. Regarding dates and times: most of our team lives in MET (UTC+1). We will switch to daylight saving time (UTC+2) on the 26th of March.

Mar 1 2017

Someone at natron.fr is probably also working on a waveform monitor: https://forum.natron.fr/t/luma-waveform-display-using-shadertoy/985

Hi again, thanks for the reply Andrej and yes let's see what others have in their mind, meanwhile I will try the example you have mentioned and try to learn more about the project idea itself.

Thanks again, I hope to make some progress very soon.

Feb 28 2017

Hello and welcome,

Hi,

I am Anudit Verma, a final-year CS undergrad from University School of Information, Communication and Technology (USICT), Delhi, India. I am an avid open source enthusiast and contributor, always interested in learning something new while contributing significantly to the open source community. I have worked earlier on a GSoC project with CiviCRM, helping them integrate the OSDI API into their system; my project can be seen here. I did most of my project's work in PHP, and it also included working with JSON and XML data. I also know C/C++. I haven't used Google's Protocol Buffers, but I am very much interested in this project, and I might learn something new and exciting. You can find out more about me at anudit.in/aboutme

I followed the discussion on this thread, so I am aware that we are currently focusing more on the project's tasks and less on which language should be used to complete the project. I have a small question, though: is it up to the student to choose a language that is part of their skill set? I completely understand that the choice should not be based on how easily a student can code in a given language; rather, a specific language could be set so as to optimise the project itself in the end. Could you please shed more light on this? It would also be great if you could provide some links to resources where I can learn more about the apertus code base and start my contributions.

You still shouldn't forget that ARM processors do not have infinite resources to process things. If possible, we should use JIT versions of languages or ones optimized for ARM. I only had a glimpse at it on Google during my lunch break at work.

All languages mentioned are well tested, providing a rich environment.

A word of caution: just because some language is hip at the moment doesn't mean that its performance or feature set is suited for embedded systems. It should be evaluated first. I still vote for C/C++ for the backend; it may be complex for some people, but it (usually) gives the best performance.

This is what I find confusing, hence my recommendation to rename it to "AXIOM Beta Webinterface".

I would also like to add that there is no exclusivity with APIs. We can offer PHP, Python, Node.js, Go, bash, etc. APIs, and the more options there are, the happier users and developers will be.

Backend language

Dear @all,

after talking with Sebastion today, I would recommend restructuring the task as follows:

Feb 21 2017

Feb 20 2017

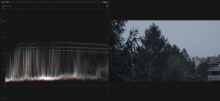

Above (top right) is an early crude waveform produced from data from the Axiom Beta. The larger waveform is from a recording at 1080p as viewed on DaVinci Resolve, which includes the Axiom Beta overlay.

A good example is the waveform of Final Cut Pro X: it has color information merged into the luma-based waveform. You can watch it here at minute 3:10 (YouTube).
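For reference, a plain luma waveform can be computed as a per-column histogram of luma values. The Python sketch below shows that basic idea only; it does not attempt the colour-merged variant seen in Final Cut Pro X. The Rec. 709 luma weights are standard, while the 8-bit input, the data layout and the function names are assumptions for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit R, G, B


def luma_709(r: int, g: int, b: int) -> int:
    """Rec. 709 luma from 8-bit R'G'B', rounded and clamped to 0..255."""
    return min(255, round(0.2126 * r + 0.7152 * g + 0.0722 * b))


def luma_waveform(frame: List[List[Pixel]], levels: int = 256) -> List[List[int]]:
    """Return counts[level][column].

    Each entry says how many pixels in that image column have that luma
    level. Plotting the counts as brightness, with level 0 at the bottom,
    gives the usual waveform display.
    """
    width = len(frame[0])
    counts = [[0] * width for _ in range(levels)]
    for row in frame:
        for x, (r, g, b) in enumerate(row):
            # Scale the 8-bit luma to the requested number of vertical levels.
            level = luma_709(r, g, b) * (levels - 1) // 255
            counts[level][x] += 1
    return counts
```

Keeping separate per-channel counts instead of a single luma count would be one way to approach a colour-tinted display, but that is a design choice beyond this sketch.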

Feb 17 2017

Feb 13 2017

Feb 11 2017

Maybe related: T736