Right. Except for motion-blur frame artifacting.

Jan 24 2015

I believe frame averaging doesn't have any motion artefact problems: it's effectively the same as taking one frame of 1/50th second exposure, and temporal noise is largely averaged out. Fixed pattern noise remains (although a black balance can deal with a lot of that). The main drawback is a significantly reduced ISO.
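A minimal sketch of why averaging suppresses temporal noise but leaves fixed-pattern noise untouched. The noise model (a fixed Gaussian offset per pixel plus fresh Gaussian noise in every frame) and all parameter values are illustrative assumptions, not measurements from the sensor:

```python
import random
import statistics


def simulate(n_frames, n_pixels=2000, signal=100.0,
             temporal_sigma=5.0, fpn_sigma=2.0, seed=1):
    """Average n_frames of a simulated flat field.

    Illustrative model only: every pixel has a fixed offset (fixed-pattern
    noise) plus new Gaussian noise in each frame (temporal noise).
    Returns (temporal_residual, fpn_spread).
    """
    rng = random.Random(seed)
    # Fixed-pattern offsets: drawn once, identical in every frame.
    fpn = [rng.gauss(0.0, fpn_sigma) for _ in range(n_pixels)]
    errors = []
    for offset in fpn:
        frames = [signal + offset + rng.gauss(0.0, temporal_sigma)
                  for _ in range(n_frames)]
        mean = sum(frames) / n_frames
        # Temporal error: distance of the average from this pixel's true value.
        errors.append(mean - (signal + offset))
    return statistics.pstdev(errors), statistics.pstdev(fpn)


for n in (1, 4, 16):
    temporal, fixed = simulate(n)
    print(f"{n:2d} frames: temporal {temporal:.2f}, fixed-pattern {fixed:.2f}")
```

The temporal term should shrink roughly as 1/sqrt(N) while the fixed-pattern spread stays the same, which is why a separate black balance is still needed.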

And motion artifacting?

Given that the sensor's data is effectively unknown, a “preview” could be interpreted to mean a few different things:

- A generic 709 that correctly transforms the sensor's data into meaningful values. That is, it requires defining the sensor's primaries in relation to 709 / sRGB, hence the need for a 3D LUT, which is the only method that can adjust the saturation intents.

- A more creative 709 view that might offer slightly crunchier blacks and a softer rolloff. Some vendors take a little creative liberty with their 709 output. Still requires a 3D LUT.

- A unique preview LUT, or series of LUTs, designed by a cinematographer to estimate looks for lighting on set. Would require a primaries transform to whichever display type is chosen, and therefore a 3D LUT.
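All three options come down to looking up each pixel in a 3D LUT. A minimal sketch of trilinear 3D LUT application, assuming RGB values normalised to 0..1 and a LUT stored as nested lists indexed `lut[r][g][b]` (the layout and helper names are hypothetical, not a specific LUT file format):

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def identity_lut(size: int) -> List[List[List[RGB]]]:
    """Build an identity cube LUT with size^3 entries (useful for testing)."""
    step = 1.0 / (size - 1)
    return [[[(r * step, g * step, b * step) for b in range(size)]
             for g in range(size)] for r in range(size)]


def apply_lut(lut, rgb: RGB) -> RGB:
    """Trilinearly interpolate the LUT at an RGB point in [0, 1]^3."""
    n = len(lut) - 1
    idx, frac = [], []
    for c in rgb:
        x = min(max(c, 0.0), 1.0) * n   # clamp, then scale to lattice units
        i = min(int(x), n - 1)          # lower lattice index, so i + 1 is valid
        idx.append(i)
        frac.append(x - i)
    (r0, g0, b0), (fr, fg, fb) = idx, frac
    out = [0.0, 0.0, 0.0]
    # Weighted sum over the 8 lattice points surrounding the input.
    for dr in (0, 1):
        wr = fr if dr else 1.0 - fr
        for dg in (0, 1):
            wg = fg if dg else 1.0 - fg
            for db in (0, 1):
                wb = fb if db else 1.0 - fb
                entry = lut[r0 + dr][g0 + dg][b0 + db]
                for k in range(3):
                    out[k] += wr * wg * wb * entry[k]
    return tuple(out)
```

Because every output channel is interpolated from all three input channels, this can mix channels (primaries and saturation), which three independent 1D curves cannot.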

Jan 23 2015

I think it's probably not worth it, no? Maybe just work on better processing ;-)

Response from Cmosis:

Jan 19 2015

I'm willing to give it a go. Who do I contact?

The Test Pattern is precisely that, a pattern which is sent from the sensor _instead_ of the actual image.

My preference (as stated on IRC):

Status: the only remaining problem with the current u-boot build seems to be that uenv.txt is not loaded by default. It did require a minor change to uenv.txt.

Jan 18 2015

Ah, thanks for bringing this as a task to the lab. Yes, we discussed it with Alex, and it seems to have very high potential for increasing dynamic range and reducing noise.

Ah, yes. I suppose you would!

I wouldn't call it simple, as we would have to implement a completely different debayering algorithm, but it's technically possible.

Okay, so a 16mm crop area would be simple enough to implement then. It might be worth using the line skip as well since this would increase the max frame rate at the same time.

According to the sensor datasheet, you can specify lines to skip, but not columns.
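On a Bayer sensor, skipping single lines would leave only one CFA row type, so skipping presumably has to happen in pairs of rows. A minimal sketch of which rows a read-2/skip-2 pattern would keep, assuming an RGGB layout; the pattern and function names are hypothetical, and the sensor's real skip modes are defined in the datasheet:

```python
def rows_read(height: int, read: int = 2, skip: int = 2) -> list[int]:
    """Indices of sensor rows read out with a read-N / skip-M pattern.

    Hypothetical scheme: an even `read` keeps the Bayer row alternation
    (R/G row, then G/B row) intact in the output.
    """
    period = read + skip
    return [y for y in range(height) if y % period < read]


def bayer_row_types(rows: list[int]) -> list[str]:
    """Label each row by CFA row type, assuming an RGGB layout."""
    return ["RG" if y % 2 == 0 else "GB" for y in rows]


print(rows_read(12))                       # rows kept with read 2 / skip 2
print(bayer_row_types(rows_read(12)))      # still alternating RG / GB
print(bayer_row_types(rows_read(12, 1, 1)))  # every row is RG: blue is lost
```

Reading half the rows should also shorten readout, which is where the frame rate gain mentioned above would come from, at the cost of vertical resolution.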

Jan 16 2015

Working here: https://lite5.framapad.org/p/eLstD0Zxif

Jan 13 2015

The Elphel PHP is a great reference here: http://wiki.elphel.com/index.php?title=PHP_in_Elphel_cameras

Jan 10 2015

Although Alex at Magic Lantern seemed to indicate that the black reference columns are too small to do much to reduce the noise: http://www.magiclantern.fm/forum/index.php?topic=11787.0
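A common use of optically black columns is per-row offset correction: estimate each row's black level from its black pixels and subtract it. A minimal sketch, assuming the black columns are the first `n_black` columns of each row (their actual position on the Cmosis sensor has to come from the datasheet) and using the median to tolerate hot pixels:

```python
import statistics


def correct_row_offsets(frame: list[list[float]], n_black: int) -> list[list[float]]:
    """Subtract a per-row black level estimated from the first n_black columns.

    Returns only the active (non-black) columns. The median is used so a
    single hot pixel in the black columns does not skew the estimate.
    """
    corrected = []
    for row in frame:
        black = statistics.median(row[:n_black])
        corrected.append([v - black for v in row[n_black:]])
    return corrected
```

The row estimate is itself noisy: its error falls only roughly with the square root of the number of black pixels per row, so with very few black columns the correction can add about as much noise as it removes, which fits the concern above.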

Here's the bit I was thinking about:

I rolled my own devicetree for the ZedBoard, it might make sense to do so for the MicroZed as well.

Also, some devices will not be required in the kernel (because the hardware is not there) and others might need to be enabled (because the default is to disable them).

So first of all, the uenv.txt issue will be solved by the approach Sven described. It needs a recompiled u-boot, which we can handle.

QSPI is not accessible under Linux with the image:

[ 0.746324] zynq-qspi e000d000.ps7-qspi: pclk clock not found.

[ 0.750826] zynq-qspi: probe of e000d000.ps7-qspi failed with error -2

We should really move this to a faster medium. Please ping me on IRC in future if you need to know something, to avoid further delays.

Jan 8 2015

Yes, I'm not sure how it works. I believe there are some pixels on the Cmosis chip set aside as a black reference, but I'd need to look through the datasheet to find them.

Jan 7 2015

The black shading between each frame is indeed interesting, but it would mean the sensor can capture data without "opening" the (electronic) shutter.
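Assuming "black shading" means something close to dark-frame subtraction (a frame captured with no light reaching the pixels, subtracted from the image to remove per-pixel offsets), the processing side could look like this minimal sketch. The function names and the optional pedestal are hypothetical, and averaging several dark frames first keeps their temporal noise from being added to every image:

```python
def average_frames(frames):
    """Pixel-wise mean of equally sized 2D frames (lists of rows)."""
    n = len(frames)
    return [[sum(px) / n for px in zip(*rows)] for rows in zip(*frames)]


def subtract_dark(image, dark, pedestal=0.0):
    """Subtract a master dark frame, optionally adding a pedestal.

    Results are clamped at 0 so noise cannot produce negative code values.
    """
    return [[max(v - d + pedestal, 0.0) for v, d in zip(irow, drow)]
            for irow, drow in zip(image, dark)]
```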

What does it even mean in the context of an electronic shutter?

I think both Cmosis and Alex from Magic Lantern should be able to help with this.

Some info on black shading in Magic Lantern: http://www.magiclantern.fm/forum/index.php?topic=9861.0

"Black shading" sounds interesting; please elaborate on what it is and how it is done.

Jan 6 2015

The chip has a programmable window mode, which means the chip will only read the centre 1080 lines, but I think they span the full width of 4096 pixels. I'm not sure whether you can specify a window with a shorter line length, but perhaps Cmosis could tell you. If you can get the chip to read out only that window, it would make processing a lot easier. Or perhaps it's simple enough to disregard the first and last 1,088 pixels of each line (4096 - 2 x 1088 = 1920). One added advantage would be a higher maximum frame rate a la Red.
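A minimal sketch of computing the offsets for a centred 1920 x 1080 window, assuming a 4096 x 3072 active area (the 3072-line height is an assumption; only the 4096-pixel width is mentioned above). Offsets are kept even so the window starts on the same Bayer phase as the full frame:

```python
def centre_window(sensor_w: int, sensor_h: int,
                  win_w: int, win_h: int) -> tuple[int, int]:
    """Top-left (x, y) offset of a centred readout window.

    Offsets are rounded down to even numbers so the window keeps the
    full frame's 2x2 Bayer phase (assumes a 2x2 CFA).
    """
    if win_w > sensor_w or win_h > sensor_h:
        raise ValueError("window larger than sensor")
    x = (sensor_w - win_w) // 2
    y = (sensor_h - win_h) // 2
    return x - x % 2, y - y % 2


print(centre_window(4096, 3072, 1920, 1080))
```

The x offset of 1088 matches the "first and last 1,088 pixels" figure above.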

Jan 5 2015

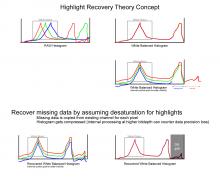

Highlight recovery is only useful in-camera when recording debayered (non-raw) images, since with raw data it can be carried out in post. However, the process itself needs to be carried out on the raw data stream.

As I understand it, the scope for this is debayered data. Raw (post-)processing could use other, more clever strategies to enhance highlights, something we don't want to / can't afford to do in-camera.

@Bertl: The idea is that the data, being captured in a scene-referred / sensor linear manner, becomes occluded near the top end. I am not entirely sure this applies to the current design, but I will outline the general concept.
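One simple way to illustrate the concept: when one channel clips while the other two are still valid, estimate the clipped channel from the others using the ratio observed in unclipped pixels. This is a swapped-in textbook approach, not the design's method; it uses one global ratio over per-pixel RGB triples for brevity, whereas a practical version would use local neighbourhoods:

```python
def recover_clipped_channel(pixels, clip, ch):
    """Estimate channel `ch` where it has clipped but the other two have not.

    The ratio between `ch` and the mean of the other two channels is measured
    on unclipped pixels and applied to clipped ones. Recovered values are
    never allowed to fall below the clip level.
    """
    others = [k for k in range(3) if k != ch]

    def others_mean(p):
        return sum(p[k] for k in others) / len(others)

    ratios = [p[ch] / others_mean(p) for p in pixels
              if max(p) < clip and others_mean(p) > 0]
    if not ratios:
        return [tuple(p) for p in pixels]  # nothing to learn the ratio from
    ratio = sum(ratios) / len(ratios)
    out = []
    for p in pixels:
        q = list(p)
        if p[ch] >= clip and all(p[k] < clip for k in others):
            q[ch] = max(clip, ratio * others_mean(p))
        out.append(tuple(q))
    return out
```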

Jan 4 2015

I'd put this into the serious consideration category: the dynamic range of the chip is already somewhat restricted, so any in-camera processing that can increase dynamic range at the top end or reduce noise in the shadow areas would definitely be welcome.

I don't see why not. You could simply disable one of the HDMI 1.0 ports when running the HDMI 1.4 port 'hot' (as it were), i.e. if it is used for raw, 4K or high-speed output. If it's used for HD only, the bandwidth would be the same as HDMI 1.0, but you'd have the extra bandwidth when needed and the extra output when you don't. Surely that's the most flexible option?

Maybe this post by Alex from ML can shed some light: https://groups.google.com/forum/#!topic/ml-devel/3qTOXhj5FA8

The explanation in the link doesn't make sense to me; please give an example with discrete values (e.g. an 8-bit range).

We have about 16 Gbit/s of bandwidth to the shield; doing the math explains why this isn't really "easier" :)
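A minimal sketch of that math, counting each output as pixel clock x bits per pixel. It uses the standard CEA-861 pixel clocks (including blanking) and assumes 8-bit RGB (24 bpp); TMDS 8b/10b coding overhead is ignored, and whether the 16 Gbit/s figure is payload or raw rate is not stated above:

```python
# Standard CEA-861 pixel clocks in MHz (blanking included).
PIXEL_CLOCK_MHZ = {
    "1080p60": 148.5,
    "2160p30": 297.0,
}


def link_gbps(fmt: str, bits_per_pixel: int = 24) -> float:
    """Approximate payload rate of one output: pixel clock x bits per pixel.

    Ignores TMDS 8b/10b coding, so the on-wire rate is 25% higher.
    """
    return PIXEL_CLOCK_MHZ[fmt] * bits_per_pixel / 1000.0


def total_gbps(formats: list[str], bits_per_pixel: int = 24) -> float:
    """Sum of the approximate payload rates of several outputs."""
    return sum(link_gbps(f, bits_per_pixel) for f in formats)


print(total_gbps(["2160p30", "1080p60", "1080p60"]))
```

One 4K30 output plus two 1080p60 monitoring outputs already comes to about 14.3 Gbit/s by this estimate, before any higher bit depths.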

Jan 3 2015

It might be easier to produce just one shield that has one HDMI 1.4b port (for high speed or 4K) and two HDMI 1.0 ports for monitoring.

We plan to have two different HDMI shields, one with 3 ports and one with a single port (maybe 2 ports if we have enough bandwidth) which will allow for higher pixel clocks and thus larger formats.

Jan 2 2015

Summary from talking to a VFX guy specialized in motion capture and real-time studio camera tracking:

Alternatively, it might be better to reserve the high-speed and raw modes for SDI shields, as these seem to allow much higher data rates even with just 3G-SDI (let alone 6G or 12G).

Those sound plausible to me. However, I do want to throw one thing out there: the Atomos Shogun has an HDMI 1.4b board in it. According to the specs this allows it to accept 4K HDMI signals and HD signals at up to 120fps.

By all means, if it can be managed, why not do it; there's a lot to be said for doing something that people are used to. I was simply pointing out that blocks of colour might be easier to implement.

I imagine it would be easier to show Zebra areas as blocks of a single colour. Then all you'd need to do is identify any pixels whose luma values fall within a selected range and change them to the selected colour.

Additional 'zebra patterns' could then be added with different colours, so you have one set of values represented by, for example, dark purple (deep shadow) and another set by red (white clip).
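The colour-block idea can be sketched in a few lines: compute luma, and replace any pixel whose luma falls in a band with that band's colour. This assumes normalised R'G'B' values in 0..1 and Rec. 709 luma coefficients; the band limits and colours below are hypothetical examples:

```python
# Rec. 709 luma coefficients.
KR, KG, KB = 0.2126, 0.7152, 0.0722

# Hypothetical example bands: (low, high, colour), half-open [low, high).
DEEP_SHADOW = (0.3, 0.0, 0.4)   # dark purple
WHITE_CLIP = (1.0, 0.0, 0.0)    # red
EXAMPLE_BANDS = [
    (0.0, 0.05, DEEP_SHADOW),
    (0.98, float("inf"), WHITE_CLIP),  # anything at or above 98%
]


def luma709(r, g, b):
    return KR * r + KG * g + KB * b


def colour_zebras(pixels, bands=EXAMPLE_BANDS):
    """Replace pixels whose luma falls inside a band with that band's colour.

    The first matching band wins; pixels outside every band pass through.
    """
    out = []
    for p in pixels:
        y = luma709(*p)
        for low, high, colour in bands:
            if low <= y < high:
                out.append(colour)
                break
        else:
            out.append(p)
    return out
```

A band around a skin-tone level could be added to the list in the same way.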

One thing I realised last night was that there is no particular need these days to have the 'zebras' displayed as an actual zebra pattern (the stripes). The only reason they were originally displayed as stripes was because the first video cameras only had black and white viewfinders hence there was no other way to display this relevant information.

Not sure why my previous comment was removed. I was simply pointing out the ideal set of features. As a general rule I don't think anyone is likely to have a problem having one feed permanently clean for recording purposes.

Let's first create a list of usage scenarios for how the 3 outputs might be used (even the wild configurations):

Adding a complete image path/feature cross-switch would be possible but very expensive FPGA resource wise, so I would prefer to select in advance which path can apply what features.

For example, if we dedicate one output to recording, then this output shouldn't have to handle any features or overlays.

I think the first thing to consider is that there are multiple image pathways possible on the camera. One output may be a log or even raw image stream, while another may show a monitoring LUT (e.g. Log to Rec709), so the user needs to be able to select both the stream the zebra is applied to and the stream it is calculated from.
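Since a full cross-switch is expensive, each output could get a fixed, pre-selected feature set. A minimal sketch of such a configuration check, covering two constraints from this discussion: a recording output stays clean, and a zebra may be calculated from one stream but drawn on another. All names (`OutputPath`, the stream and feature labels) are hypothetical:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class OutputPath:
    name: str
    stream: str                          # stream shown, e.g. "log" or "rec709"
    features: frozenset = frozenset()    # overlays this path is built to apply
    zebra_source: Optional[str] = None   # stream the zebra is calculated from
    recording: bool = False


def validate(paths: list[OutputPath], streams: set[str]) -> list[str]:
    """Return configuration problems (an empty list means the setup is valid)."""
    problems = []
    for p in paths:
        if p.stream not in streams:
            problems.append(f"{p.name}: unknown stream {p.stream!r}")
        if p.recording and p.features:
            problems.append(f"{p.name}: recording output must stay clean")
        if "zebra" in p.features and p.zebra_source not in streams:
            problems.append(f"{p.name}: zebra needs a valid source stream")
    return problems


streams = {"raw", "log", "rec709"}
setup = [
    OutputPath("HDMI-1", "raw", recording=True),
    OutputPath("HDMI-2", "rec709", frozenset({"zebra"}), zebra_source="log"),
]
print(validate(setup, streams))
```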

Dec 31 2014

From Magic Lantern (http://wiki.magiclantern.fm/userguide):

One clear rule is to always show clipping as recorded.

What would be the best way to 'clear' my configuration so I can observe the behavior you are seeing? Did you set the DIP switches to boot from SD?

No, it doesn't, because it seems to execute the wrong script.

Dec 30 2014

Please, if possible, provide some examples for good/bad features so that we can avoid making the same mistakes others made and strive for the "best possible" implementation :)

I find the 'sharpening' approach less helpful than the coloured edges. The former method often leaves me feeling a little concerned, whereas the coloured edges leave you in no doubt.

Yes and no. One traditional use of Zebra patterns was to set correct skin tone exposure levels (roughly 67 IRE in Rec 709 colour space). If this capability were lost (because the zebras became channel-specific only), I think many people would be more than a little annoyed, as they rely on it for correct exposure.
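The coloured-edge style of focus peaking can be sketched as an edge detector plus an overlay. This minimal version uses a 3x3 Sobel operator on a 2D luma array and paints pixels above a threshold in a solid colour; the threshold and colour are hypothetical parameters, and |gx| + |gy| stands in for the true gradient magnitude to keep it cheap:

```python
def peaking_mask(luma, threshold):
    """Mark pixels whose 3x3 Sobel gradient exceeds threshold.

    `luma` is a 2D list of rows; border pixels are left unmarked.
    """
    a = luma
    h, w = len(a), len(a[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (a[y - 1][x + 1] + 2 * a[y][x + 1] + a[y + 1][x + 1]
                  - a[y - 1][x - 1] - 2 * a[y][x - 1] - a[y + 1][x - 1])
            gy = (a[y + 1][x - 1] + 2 * a[y + 1][x] + a[y + 1][x + 1]
                  - a[y - 1][x - 1] - 2 * a[y - 1][x] - a[y - 1][x + 1])
            # |gx| + |gy| approximates sqrt(gx^2 + gy^2) without a square root.
            mask[y][x] = abs(gx) + abs(gy) > threshold
    return mask


def overlay(rgb, mask, colour=(1.0, 0.0, 0.0)):
    """Paint marked pixels in a solid colour; leave the rest untouched."""
    return [[colour if m else px for px, m in zip(prow, mrow)]
            for prow, mrow in zip(rgb, mask)]
```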

Dec 29 2014

Would it be possible (and easier) to treat this as extreme sharpening applied to the image? On the newer Red cameras it looks more like sharpening, and it's very effective for focusing.

But what I was more interested about: does the image "autoboot"?

So what has changed between the last revision and this one? Mainly that the Ethernet interface is now started automatically and DHCP is run.

For me it would make most sense to check each channel in the raw bayer data for over/under a certain threshold (which could be different/weighted) as the main idea is to prevent information loss from clipping, no?
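A minimal sketch of that per-channel check on raw Bayer data, assuming an RGGB layout and hypothetical 12-bit thresholds; each CFA channel gets its own (low, high) limits so they can be weighted differently:

```python
def bayer_channel(y: int, x: int, pattern: str = "RGGB") -> str:
    """CFA colour at (y, x) for a 2x2 pattern string read row by row."""
    return pattern[(y % 2) * 2 + (x % 2)]


def clip_flags(raw, thresholds, pattern="RGGB"):
    """Flag raw Bayer pixels outside per-channel (low, high) thresholds.

    `thresholds` maps "R", "G", "B" to (low, high) code values.
    Returns a 2D list holding "under", "over" or None for each pixel.
    """
    flags = []
    for y, row in enumerate(raw):
        out = []
        for x, v in enumerate(row):
            low, high = thresholds[bayer_channel(y, x, pattern)]
            out.append("over" if v >= high else "under" if v <= low else None)
        flags.append(out)
    return flags


# Hypothetical 12-bit limits; blue is given a lower clip point as an example.
limits = {"R": (16, 4000), "G": (16, 4000), "B": (16, 2900)}
print(clip_flags([[4095, 100], [10, 3000]], limits))
```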

There might be ways to simplify/optimize the processing; after all, we just need a rough helper indicator here, not a scientific tool.

yup

Just to sum up, the given algorithms are quite complex and require several rows (at least 3) of data.

Thinking about it now, it might also make sense to add "dot size" as a parameter, as most monitors with lower than Full HD resolution might not be able to display 1-pixel dots properly.

Stripe color/direction added to the parameter list.
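The "at least 3 rows" requirement is what an FPGA line buffer provides. A minimal sketch of streaming a 3x3 operator over incoming rows while holding only three of them at a time; the Laplacian used as the operator is just an example, and edge columns are skipped:

```python
from collections import deque


def stream_3x3(rows, op):
    """Apply a 3x3 operator to a stream of rows using a 3-row buffer.

    Each output row belongs to the middle buffered row and is produced as
    soon as the row below it arrives. `op` receives a 3x3 window as a list
    of three 3-element lists.
    """
    buf = deque(maxlen=3)
    for row in rows:
        buf.append(row)
        if len(buf) == 3:
            top, mid, bot = buf
            yield [op([r[x - 1:x + 2] for r in (top, mid, bot)])
                   for x in range(1, len(mid) - 1)]


def laplacian(w):
    """Example operator: 4-neighbour Laplacian, large at sharp detail."""
    return 4 * w[1][1] - w[0][1] - w[2][1] - w[1][0] - w[1][2]
```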

Direction of the stripes?

Color of the stripes?
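A minimal sketch of a stripe generator covering those parameters: stripe period and painted width (scaling both up gives coarser stripes for low-resolution monitors, in the spirit of the "dot size" idea), diagonal direction, and colour. The parameter names and defaults are hypothetical:

```python
def stripe_on(x: int, y: int, period: int = 8, width: int = 4,
              direction: int = 1) -> bool:
    """True if pixel (x, y) lies on a diagonal zebra stripe.

    direction=1 gives stripes running one way, direction=-1 the other.
    Python's % keeps the result non-negative for negative sums.
    """
    return (x + direction * y) % period < width


def apply_stripes(rgb, zebra_mask, colour=(0.0, 0.0, 0.0), **stripe_args):
    """Paint stripes only where the zebra mask is set."""
    return [[colour if m and stripe_on(x, y, **stripe_args) else px
             for x, (px, m) in enumerate(zip(prow, mrow))]
            for y, (prow, mrow) in enumerate(zip(rgb, zebra_mask))]
```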

Would be great if we could find someone interested in testing this on the Alpha!

Here is a great reference: http://www.luminous-landscape.com/forum/index.php?topic=56246.0

Maybe test it out on the Alpha?

Please define "image frequency" and explain how it is calculated from the raw Bayer data.

Dec 26 2014

Is this on the 7020?

Indeed. How about making this feature work only when the focus peaking is concentrated in a given area, and not spread out across the whole image? Or how about making it optional? Then people could activate it only when they're shooting something that will really benefit from this feature, like when trying to pull focus on people in the foreground?

Just ideas.

Judging from my experience with "smart" devices (including consumer cameras, phones and tablets), such "guessing" will consume a lot of resources, get it right in at most 80% of cases, and annoy users in the remaining 20%, because it just can't get it right there. Note that the 20% will be the ones everybody talks about :)

Problem seems to be power consumption related.

I got it to boot after applying additional power (i.e. the USB power is insufficient).