I want to evaluate whether this can be done in the FPGA in real time.

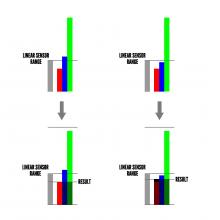

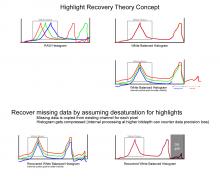

In a nutshell, highlight recovery works like this: because white balance gain has been applied, one channel typically clips at a higher value than the others. In the area where the other channels have already clipped, their values are guessed in order to recover the highlights (the reconstruction assumes the highlights are white).

As with all features, it would be great if it could be turned on/off and if the "aggressiveness" of the recovery could be tuned over several levels.
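To make the idea concrete, here is a minimal per-pixel sketch in Python. It is only an illustration of the principle described above, not the algorithm from the linked page and not a proposed FPGA design. The function name `recover_highlights`, the `enabled` switch, the `strength` parameter and the `clip_levels` argument are all hypothetical names chosen for this example. It assumes the clip level of each channel after white balance gain is known, and it works on floats, whereas real hardware would presumably use fixed-point arithmetic.

```python
def recover_highlights(pixel, clip_levels, enabled=True, strength=1.0):
    """Simplified highlight recovery for one RGB pixel (illustrative only).

    pixel       -- (r, g, b) values after white balance gain
    clip_levels -- per-channel saturation level after white balance gain
    enabled     -- hypothetical on/off switch
    strength    -- hypothetical "aggressiveness", 0.0 (off) to 1.0 (full)
    """
    if not enabled:
        return tuple(pixel)

    # A channel counts as clipped once it reaches its own saturation level.
    clipped = [v >= c for v, c in zip(pixel, clip_levels)]
    unclipped_values = [v for v, is_clipped in zip(pixel, clipped)
                        if not is_clipped]

    # Nothing to do if no channel is clipped, or if every channel is clipped
    # (in that case no channel carries usable information any more).
    if not any(clipped) or not unclipped_values:
        return tuple(pixel)

    # Assume the highlight is white: the clipped channels should be about as
    # bright as the brightest channel that still holds real data.
    estimate = max(unclipped_values)

    out = []
    for v, is_clipped in zip(pixel, clipped):
        if is_clipped and estimate > v:
            # Blend from the clipped value towards the estimate.
            v = v + strength * (estimate - v)
        out.append(v)
    return tuple(out)


# Example: sensor saturates at 1.0, white balance gains are (2.0, 1.0, 1.5),
# so the channels clip at 2.0, 1.0 and 1.5. Green and blue are clipped here,
# red still carries information.
clip = (2.0, 1.0, 1.5)
print(recover_highlights((1.75, 1.0, 1.5), clip))                # (1.75, 1.75, 1.75)
print(recover_highlights((1.75, 1.0, 1.5), clip, strength=0.5))  # (1.75, 1.375, 1.625)
```

The per-pixel form is deliberate: it needs only comparisons, a maximum and one multiply per channel, which is the kind of operation that would have to fit into a streaming pipeline. Whether that is actually feasible in real time on the FPGA is exactly what needs to be evaluated.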

I would classify this feature wish as non-essential but nice to have: it would increase dynamic range once everything else is working.

Details: http://www.colormancer.com/whitepapers/white-balance/free-white-balance-plugin.htm