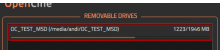

Dan noted that you often want to back up an entire magazine or drive, no matter what file structure is on it, and simply create a 100% identical clone of it.

This should be one backup mode. It could be called "clone mode" or "1:1 backup".
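As a rough illustration of what such a mode might do, here is a minimal Python sketch. It assumes a file-level clone (copy the whole directory tree, then verify every file by checksum) rather than a sector-level disk image, and the names `clone_media` and `file_digest` are hypothetical, not part of any existing tool.

```python
import hashlib
import shutil
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1024 * 1024), b""):
            h.update(chunk)
    return h.hexdigest()


def clone_media(source: Path, target: Path) -> list[str]:
    """Copy everything under `source` to `target` and verify the copy.

    Assumption: `target` does not exist yet (copytree raises otherwise).
    Returns the relative paths of files that are missing or differ in the
    clone; an empty list means every file matched.
    """
    # Copy the whole tree without interpreting its structure.
    shutil.copytree(source, target)

    mismatches = []
    for src_file in sorted(p for p in source.rglob("*") if p.is_file()):
        rel = src_file.relative_to(source)
        dst_file = target / rel
        if not dst_file.is_file() or file_digest(src_file) != file_digest(dst_file):
            mismatches.append(rel.as_posix())
    return mismatches
```

The point of the sketch is that clone mode asks the user nothing about the content: it takes one source and one destination, and the only result that matters is whether the verification list comes back empty.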

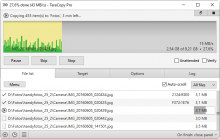

Another, more interactive mode is this concept for a "Tabbed View Mode". Often you back up partly filled record media and, once the backup is complete, decide not to erase the media because there is still space left. The next time you back up, you have to figure out what you already backed up and what is new. This mode is designed to help here: